Oct 25, 2024

Impersonator Accounts: A Bigger Threat Than Deepfakes in the 2024 Election (Harris vs. Trump)

By Holly Elliott

As the 2024 election approaches and the polls remain tight, the nation's attention has turned to predicting the outcome. Meanwhile, we've been analyzing the unauthorized content that uses each candidate's likeness. This content is a growing concern because it can distort public perception and influence the political landscape.

Here's what we found in the most recent data on Kamala Harris and Donald Trump, covering October 1–21, 2024.

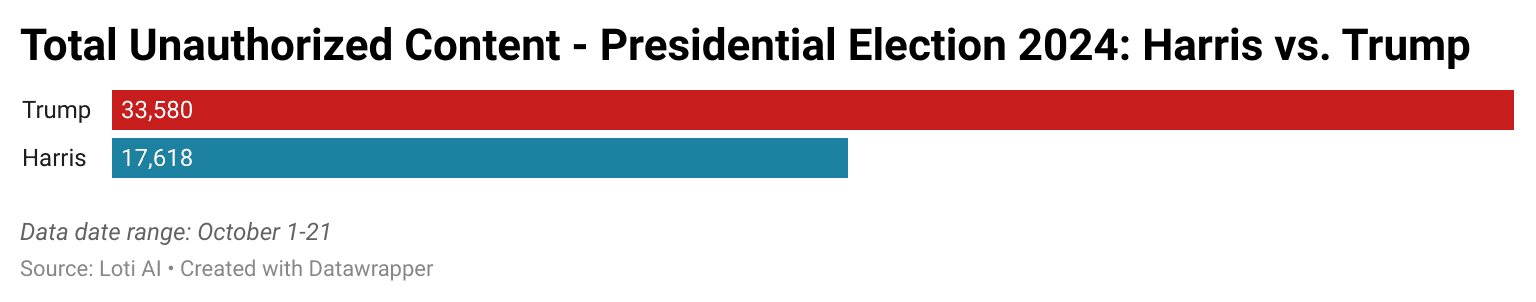

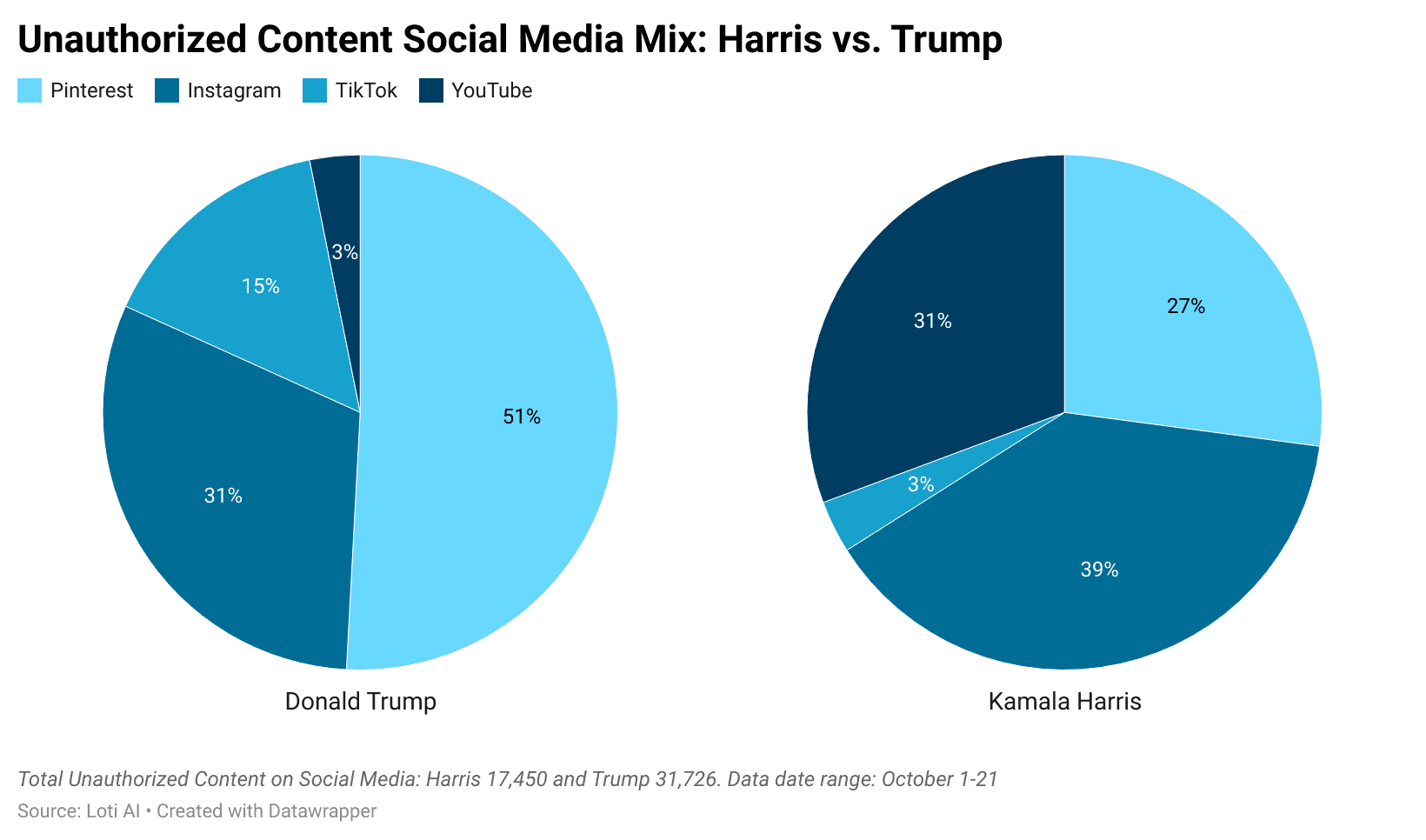

Overall, Trump faces almost twice as much unauthorized content as Harris: 33,580 cases versus her 17,618. On YouTube, however, the pattern reverses, and Harris encounters five times more unauthorized content than Trump. This notable outlier highlights platform-specific differences in how each candidate is targeted online.

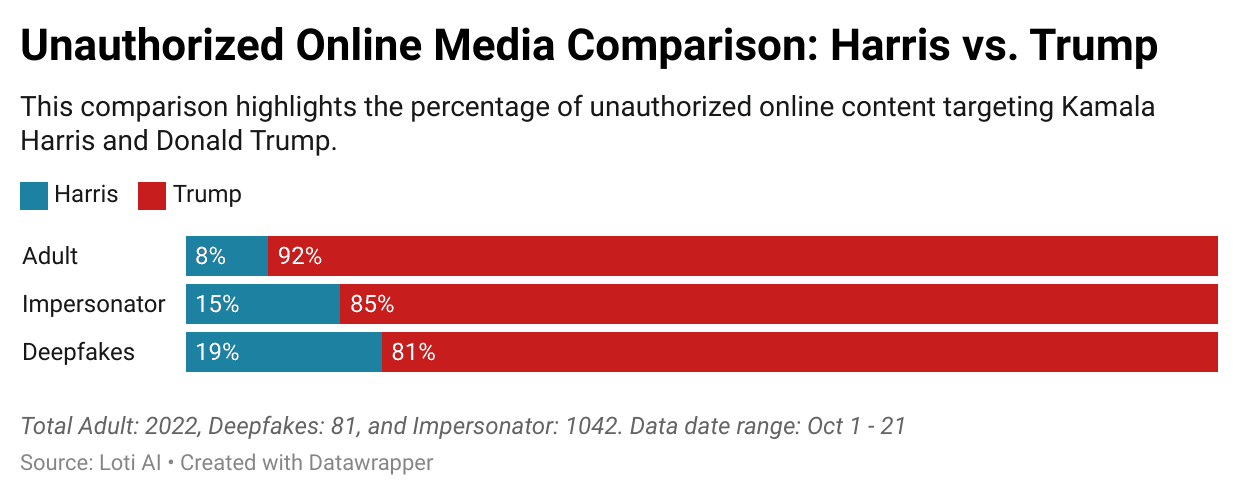

Impersonator accounts far outnumber deepfakes for both Trump and Harris, which suggests that impersonator accounts pose the greater risk to candidates when it comes to public perception and the spread of misinformation. For both candidates, impersonator accounts are found most often on TikTok, which hosts 83% of Trump's impersonator accounts and 72% of Harris's.

For both candidates, unauthorized content on adult sites exceeds both their impersonator and deepfake instances. However, Trump's adult instances outnumber his impersonator instances by more than two to one, while Harris's are split almost evenly between adult and impersonator content.

“The data on Harris and Trump underscores the growing threat of unauthorized content and the unchecked spread of misinformation online. While 'deepfakes' may be the term that gets the most attention, the reality is that impersonator accounts and fake adult content are far more prevalent and damaging.” – Luke Arrigoni, Founder and CEO of Loti AI

As the election season intensifies, the data reveals a clear and concerning trend: the battle over online content is less about flashy deepfakes and more about the subtler, yet far more pervasive, threats of impersonator accounts and adult content. These forms of unauthorized media not only harm the candidates but can also mislead and confuse voters. Addressing them will be crucial to protecting both the candidates' reputations and the integrity of the democratic process itself. As online platforms continue to evolve, so must our efforts to combat this growing digital threat.

About Loti AI:

At Loti AI, we specialize in protecting major celebrities, public figures, and corporate intellectual property from the ever-growing threat of unauthorized content online. Founded in 2022, Loti AI focuses on combating deepfakes and impersonations, offering services such as likeness protection, content location and removal, and contract enforcement. Our coverage spans the entire public internet, including social media platforms and adult sites.

Loti AI Methodology:

We use advanced, proprietary detection tools, such as face and voice recognition models, to systematically gather data on instances where these individuals' likenesses are misused. Our systems scan social media platforms, video-sharing websites, and adult content domains to detect manipulated media falling into three primary categories: social media appearances, impersonations, and deepfakes (including explicit content). A sample of all detected instances was verified through manual review to ensure accuracy, filter out false positives, and categorize the content appropriately.

Loti's detection systems run on a 24-hour cycle, with no preference given to any particular day of the week or hour of the day. Processing 24 hours' worth of content takes roughly 24 hours. Days are defined in UTC.

A social media account is considered an impersonator if its name and image match the individual's, it uses first-person language, and it displays verification symbols meant to convince viewers of an authenticity that does not exist.

An image or video is counted in the adult category if it appears on a domain with a history of posting mostly adult content. Because context can determine whether something is considered explicit, we count all assets found on these domains in the same category regardless of context.